How can we reduce AI risk?

In the last post of this series, I finish the RAMO AI risk acronym with its final risk: organizational risk. I'll also distinguish a complex problem from a wicked problem and share what we can all do to minimize AI risk for ourselves and our organizations.

Organizational Risk

Even without an AI race, malicious actors, or rogue AI, organizational risk remains, because accidents happen. Reducing them requires a culture that emphasizes safety and rigorous testing. A security mindset, a questioning attitude, and disaster-planning exercises in which the team imagines what could go wrong all help.

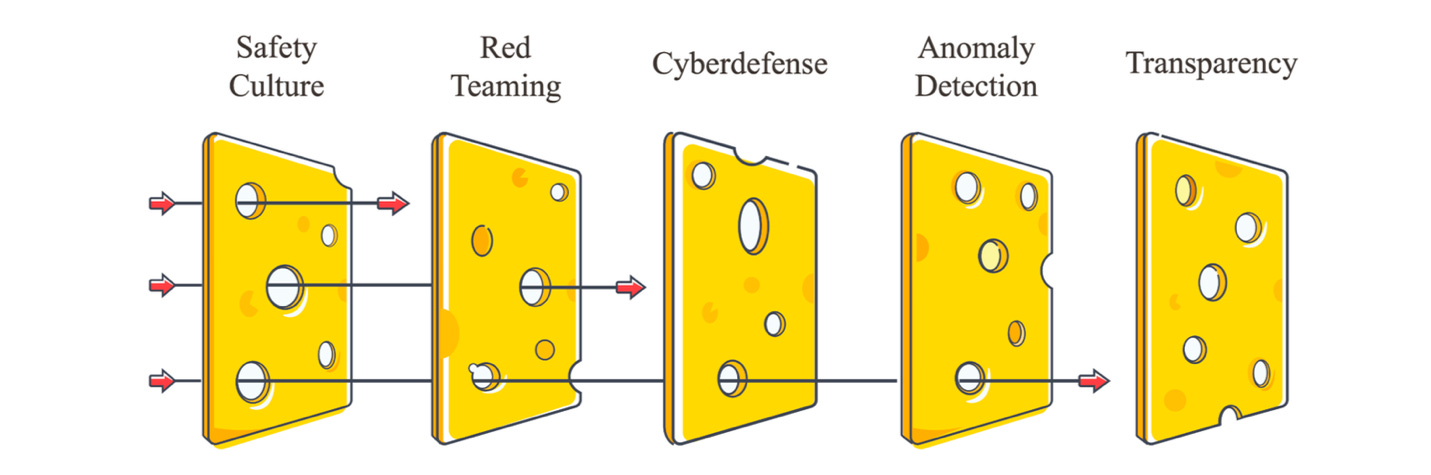

The Swiss Cheese Model of Defense[i]

The Swiss Cheese Model of Defense is a way of understanding how accidents or harmful outcomes can occur even in systems with multiple safeguards in place. Developed by psychologist James Reason in the 1990s, the model imagines each safeguard as a slice of Swiss cheese. Every slice represents a different layer of defense: this could be a safety policy, a technical measure, a training program, or an oversight process. No layer is perfect, so each slice of cheese has holes. These holes symbolize weaknesses, mistakes, or design flaws in that defense. On its own, a single hole doesn’t cause disaster because the other slices can still block the threat. But if the holes in several slices happen to line up, the hazard can pass straight through all the defenses, resulting in an accident or failure.

The strength of the model lies in showing that safety depends not on a single perfect barrier, but on a series of independent protections that fail for different reasons. In the context of AI safety, the slices might include alignment research, careful dataset vetting, red-teaming to uncover weaknesses, strict access controls, regulatory oversight, and strong incident response systems. A major AI failure would require flaws in several of these defenses to coincide: an unsafe dataset, overlooked vulnerabilities in testing, weak regulation, and ineffective oversight, all aligning at once.
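To see why independence matters so much, here is a minimal Python sketch of the model's arithmetic. The layer names and hole probabilities are hypothetical numbers chosen for illustration, not estimates for any real AI system. If the layers fail independently, a hazard gets through only when every layer fails, so the probabilities multiply. If the layers share a single root cause and always fail together, the whole stack protects no better than its single most reliable slice.

```python
import math

# Hypothetical chance that each defense fails to stop a given hazard.
# Illustrative numbers only, not estimates for any real AI system.
LAYERS = {
    "dataset vetting": 0.10,
    "red-teaming": 0.20,
    "access controls": 0.05,
    "incident response": 0.30,
}


def pass_through_independent(hole_probs):
    """Chance a hazard passes every layer when layers fail independently."""
    return math.prod(hole_probs)


def pass_through_fully_correlated(hole_probs):
    """Chance a hazard passes every layer when all layers share one root cause.

    If the layers always fail together, the hazard gets through whenever the
    most reliable layer fails, so the extra layers add no protection.
    """
    return min(hole_probs)


if __name__ == "__main__":
    probs = list(LAYERS.values())
    print(f"independent:      {pass_through_independent(probs):.4f}")       # 0.0003
    print(f"fully correlated: {pass_through_fully_correlated(probs):.4f}")  # 0.0500
```

With these made-up numbers, four independent layers let roughly 3 hazards in 10,000 through, while the same four layers failing together let through 1 in 20. That gap is the model's point: layers help most when they fail for different reasons.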

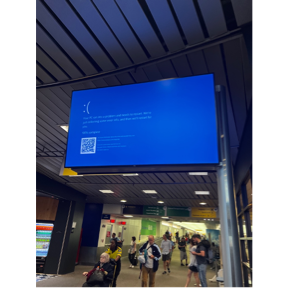

The CrowdStrike outage of July 2024 stands as a vivid example of organizational risk in the age of automated systems. It began innocuously. CrowdStrike, a cybersecurity firm used by countless enterprises and governments, pushed what should have been a routine content update to its Falcon sensor software. Within hours, millions of Windows machines across the globe began crashing.

I took this photo at a US airport. Remember this day?

Hospitals lost access to patient records. Airports grounded flights. Newsrooms went dark. Even emergency services were paralyzed in some regions. In all, an estimated 8.5 million Windows devices were knocked offline, including those belonging to Fortune 500 companies, airlines, banks, and public agencies. The root cause wasn’t a hack, nor was it an AI malfunction. It was a faulty configuration file in a single content update, which triggered a logic error that crashed the system. Yet the impact was sweeping. The fallout wasn’t limited to IT departments; it disrupted global commerce, travel, and infrastructure. All of it traced back to one software vendor and one overlooked error.

It's Wicked (Not the Broadway Musical!)

Often, all four risks get tangled together. In a race between companies, an untethered system can be released prematurely, a malicious actor can cause harm that leads to a rogue AI, and organizationally, the damage is hard to contain. As with most catastrophes, it’s hard to pin the blame on a single party. When a pandemic happens, for instance, is it the hospitals’ fault for not building in redundancy, the government’s fault for lack of preparedness, or the people’s fault for not vaccinating? Or all of the above? Like most social problems, these involve too many parties. Society is a big, messy thing with many actors and deeply divided opinions, which makes alignment difficult. This is also why AI safety is called a wicked problem, which differs from a merely complex one.

Complex problems are difficult but ultimately solvable. They may have many variables, require sophisticated approaches, and take significant time and resources, but they have clear definitions and boundaries, measurable success criteria, and solutions that can be tested and refined. There's a path toward resolution, even if it's challenging. Think of landing a rover on Mars, developing a new vaccine, or designing a more efficient engine. These are complex but "tame" problems that yield to systematic approaches and engineering solutions.

Wicked problems, as originally defined by design theorists Horst Rittel and Melvin Webber in 1973[ii], are fundamentally different. Unlike the "tame" problems of mathematics and chess, wicked problems lack clarity in both their aims and solutions. Wicked problems are those that are complex, open-ended and unpredictable, and critically, the symptoms of the problem have also become causes of the problem.

What makes a problem "wicked" is that it has no definitive formulation and no stopping rule: there's no way to know whether your solution is final. Moreover, solutions to wicked problems are not true or false (right or wrong) but better or worse. Every wicked problem is essentially unique, and every wicked problem can be considered a symptom of another problem, creating endless recursive complexity.

AI safety exemplifies a wicked problem because we can't clearly define what "safe AI" means: it depends on values, context, and use cases that vary across cultures and applications. Solutions create new problems, as safety measures might slow beneficial progress or introduce unforeseen vulnerabilities. Any wrong or mistimed solution makes the problem worse, and the problem itself evolves as AI capabilities advance. There's no clear endpoint where we can declare victory and say "we've solved AI safety."

This distinction explains why AI safety can't be approached like a traditional engineering problem. Instead of seeking a definitive solution, we must embrace ongoing adaptation, continuous stakeholder engagement, and accept that we're managing an evolving challenge rather than solving a discrete problem. The wicked nature of AI safety means we need governance frameworks, ethical considerations, and adaptive strategies that can evolve alongside the technology itself.

AI Safety Needs More Attention

If just one engineer at Boeing came forward and said, 'There’s a 1% chance this plane might fall out of the sky,' the fleet would be grounded. But with AI, many of the field's leading researchers warn of significant risk, some even of existential risk, and yet society continues full speed ahead. Why is that?

Perhaps the difference between Boeing and AI lies in immediacy and visibility. When a plane crashes, the consequences are immediate, tragic, and undeniably linked to the technology. With AI, the harms, while possibly far greater, remain largely abstract to most people. We can't point to a smoking crater and say "AI did this." The risks unfold gradually: through algorithmic bias, economic displacement, the erosion of democratic discourse, or scenarios we haven't yet imagined.

The answer isn't to abandon AI development entirely; that would be both impractical and potentially counterproductive. AI technologies are already delivering significant benefits in healthcare, scientific research, education, and countless other domains. Instead, we need a middle path: continuing to harness AI's benefits while implementing robust safeguards, transparency measures, and democratic oversight. The real challenge lies in bridging the gap between our current breakneck pace and the measured caution that such powerful technology demands. We need the same engineering rigor and safety culture that eventually made aviation one of the safest forms of travel.

What can we do about it?

The following are safety design principles we can adhere to in order to reduce AI risk.

Redundancy

Redundancy means having no single point of failure. In previous jobs, when I helped design customers' networks, we always had to eliminate single points of failure. If you bought a switch, you bought two. If you bought a storage system, you needed a backup. Malfunctions happen, and the way to avoid a disaster is to architect redundancy.

Applied to AI, this means that if we're deploying an AI system for medical diagnosis, we wouldn't rely solely on the AI's output. We'd have redundant checks, including human physician oversight, secondary AI systems trained on different data, and independent validation protocols. If any one component fails or is compromised, the others can still catch dangerous errors.
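Here is a minimal Python sketch of that idea. The `CheckResult` type, the `triage` function, and the two-check minimum are hypothetical choices for illustration, not a clinical protocol. The design choice worth noticing is that a failed or disagreeing check never resolves in the AI's favor: it always routes the case to a physician, and even a unanimous result only earns physician sign-off, never automatic action.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CheckResult:
    source: str               # which independent check produced this result
    diagnosis: Optional[str]  # None means the check failed or abstained


def triage(results: list[CheckResult], min_checks: int = 2) -> str:
    """Decide whether redundant checks agree well enough to proceed.

    Too few checks, any failed check, or any disagreement escalates the
    case to a physician. Even agreement only earns physician sign-off.
    """
    if len(results) < min_checks:
        return "escalate: not enough independent checks"
    failed = [r.source for r in results if r.diagnosis is None]
    if failed:
        return "escalate: check(s) failed: " + ", ".join(failed)
    diagnoses = {r.diagnosis for r in results}
    if len(diagnoses) > 1:
        return "escalate: checks disagree"
    return "send for physician sign-off: " + diagnoses.pop()


if __name__ == "__main__":
    agree = [CheckResult("primary model", "pneumonia"),
             CheckResult("secondary model", "pneumonia")]
    broken = [CheckResult("primary model", "pneumonia"),
              CheckResult("secondary model", None)]
    print(triage(agree))   # send for physician sign-off: pneumonia
    print(triage(broken))  # escalate: check(s) failed: secondary model
```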

Separation of Duties

This principle becomes particularly crucial as AI systems become more powerful and integrated into critical infrastructure. Think about how we structure human institutions to prevent abuse of power. No single person in a democracy can declare war, change laws, and execute those laws. Similarly, we shouldn't design AI systems where one component has authority over multiple critical functions.

In practice, this means separating the AI system that makes decisions from the system that implements those decisions, and both from the system that monitors their performance. An AI trading system, for example, might have one component that analyzes market data, another that makes trading recommendations, a third that executes trades, and a fourth that monitors for unusual patterns or potential manipulation.
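A minimal Python sketch of that four-part split, assuming a toy price-change signal, a 2% threshold, a 500-share limit, and ticker symbols that are all made up for illustration. Each class does exactly one job: the analyzer cannot trade, the recommender cannot execute, and the executor makes no decisions of its own and acts only on trades the separate monitor approves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    symbol: str
    quantity: int  # positive = buy, negative = sell


class Analyzer:
    """Reads market data and produces signals; it cannot trade."""

    def signals(self, prices: dict[str, list[float]]) -> dict[str, float]:
        # Toy signal: relative change from the first to the last price seen.
        return {s: (p[-1] - p[0]) / p[0] for s, p in prices.items() if p}


class Recommender:
    """Turns signals into proposed trades; it cannot execute them."""

    def recommend(self, signals: dict[str, float]) -> list[Recommendation]:
        return [Recommendation(s, 100 if x > 0 else -100)
                for s, x in signals.items() if abs(x) > 0.02]


class Monitor:
    """Independently reviews proposed trades for unusual patterns."""

    def __init__(self, max_quantity: int = 500):
        self.max_quantity = max_quantity

    def approve(self, rec: Recommendation) -> bool:
        return abs(rec.quantity) <= self.max_quantity


class Executor:
    """Executes only monitor-approved trades; it makes no decisions."""

    def __init__(self, monitor: Monitor):
        self.monitor = monitor
        self.executed: list[Recommendation] = []

    def execute(self, recs: list[Recommendation]) -> list[Recommendation]:
        approved = [r for r in recs if self.monitor.approve(r)]
        self.executed.extend(approved)
        return approved


if __name__ == "__main__":
    prices = {"AAA": [100.0, 104.0], "BBB": [50.0, 50.5]}
    signals = Analyzer().signals(prices)     # AAA: +4%, BBB: +1%
    recs = Recommender().recommend(signals)  # only AAA clears the 2% threshold
    print(Executor(Monitor()).execute(recs))
    # [Recommendation(symbol='AAA', quantity=100)]
```

Because the monitor is a separate object with its own limit, tightening oversight means changing one component without touching the logic that generates trades.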

Principle of Least Privilege

Each AI system should be given only the minimum capabilities and access it needs to complete its task; a narrow system should never be granted more.

Think of this like user permissions on a computer system. You wouldn't give every user administrator privileges because that increases the potential for both accidental damage and malicious abuse. Similarly, an AI system designed to recommend movies shouldn't have access to financial data, and an AI system managing logistics shouldn't have the ability to modify its own code.
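One way to picture this is a deny-by-default allow-list. In this minimal Python sketch, the system names and scope strings such as `viewing_history:read` are hypothetical. The point is that a capability exists only if someone granted it explicitly, so the movie recommender has no path to financial data and the logistics planner has no `self:modify` scope.

```python
# Hypothetical grants: each AI system gets an explicit allow-list of scopes.
# Anything not listed is denied by default.
GRANTS: dict[str, frozenset[str]] = {
    "movie_recommender": frozenset({"viewing_history:read", "catalog:read"}),
    "logistics_planner": frozenset({"inventory:read", "shipments:write"}),
}


def require(system: str, scope: str) -> None:
    """Raise PermissionError unless `system` was explicitly granted `scope`."""
    if scope not in GRANTS.get(system, frozenset()):
        raise PermissionError(f"{system} lacks scope {scope!r}")


if __name__ == "__main__":
    require("movie_recommender", "catalog:read")  # granted, so no error
    for system, scope in [("movie_recommender", "finance:read"),
                          ("logistics_planner", "self:modify")]:
        try:
            require(system, scope)
        except PermissionError as err:
            print(err)
    # movie_recommender lacks scope 'finance:read'
    # logistics_planner lacks scope 'self:modify'
```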

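This is why AGI (artificial general intelligence) matters so much. The more general an AI system becomes, the more capable it is, the harder it is to confine to a narrow set of privileges, and the harder it is to control.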

Antifragility

In his book Antifragile[iii], Nassim Nicholas Taleb introduces three vivid metaphors to represent how systems respond to stress: the Sword of Damocles, the Phoenix, and the Hydra. Each one captures a distinct relationship to volatility, disorder, and time.

The Sword of Damocles represents fragility. In the Greek myth, Damocles is allowed to enjoy the luxury of a king's throne, but above his head hangs a sharp sword suspended by a single horsehair. It only takes one small shake for the entire system to collapse. In Taleb’s world, this is the type of person, company, or society that seems stable on the surface but is actually at the mercy of a single disruption: a black swan event, a sudden downturn, a hidden weakness. Fragile systems fear time, because over time, the chances of that sword falling only increase.

The Phoenix, by contrast, is robust. When burned to ashes, it rises again, exactly the same as before. It doesn't break, but it doesn't improve either. This mythological bird represents things that can survive stress but don't benefit from it. They're durable, but not dynamic. Think of a well-armored institution or a toughened material: it can withstand a blow, but it doesn't grow from the experience. Robustness is admirable, but it’s ultimately static. It resists time and chaos without changing.

Then comes the Hydra, the ultimate symbol of antifragility. In the myth, when one head of the Hydra is cut off, two grow back in its place. This creature doesn’t just survive harm; it benefits from it. Attempts to hurt it only make it stronger. In Taleb's framework, antifragile systems thrive on randomness, stress, and chaos. They adapt, mutate, and evolve. This is the startup that pivots and finds success after a failed product. The person who uses failure as fuel. The immune system that strengthens after exposure to pathogens. Antifragility means loving the volatility that others fear.

An antifragile AI safety system might work like this: when an AI system encounters a novel situation that its safety protocols don't cover, rather than just flagging it for human review, the system uses that encounter to strengthen its safety protocols. It learns not just what went wrong, but what classes of similar problems might exist, and proactively develops defenses against them.
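As a toy illustration, assuming actions can be described by a tool, an operation, and a target (a hypothetical schema), the Python sketch below generalizes each incident to its whole class. After one harmful `shell` deletion, every `shell` deletion is blocked, not just the exact path that caused trouble. The generalization rule is deliberately crude; it only shows the shape of learning from a failure rather than patching one instance.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Action:
    tool: str       # hypothetical, e.g. "shell" or "email"
    operation: str  # hypothetical, e.g. "delete" or "send"
    target: str     # e.g. a file path or a recipient


@dataclass
class AdaptiveGuard:
    # Each rule is a (tool, operation) pair, i.e. a whole class of actions.
    blocked_classes: set[tuple[str, str]] = field(default_factory=set)
    incidents: list[Action] = field(default_factory=list)

    def allows(self, action: Action) -> bool:
        return (action.tool, action.operation) not in self.blocked_classes

    def learn_from_incident(self, action: Action) -> None:
        """Record the incident and block its whole class, not just this case."""
        self.incidents.append(action)
        self.blocked_classes.add((action.tool, action.operation))


if __name__ == "__main__":
    guard = AdaptiveGuard()
    guard.learn_from_incident(Action("shell", "delete", "/data/reports"))
    print(guard.allows(Action("shell", "delete", "/data/backups")))  # False
    print(guard.allows(Action("shell", "list", "/data/backups")))    # True
```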

Transparency

Model interpretability remains one of the most challenging aspects of AI safety. We're often in the uncomfortable position of deploying systems whose decision-making processes we don't fully understand. This is like having a brilliant employee who consistently makes good decisions but can never explain their reasoning.

Transparency in AI requires multiple approaches working together. We need better technical tools for understanding how models make decisions, clearer documentation of training processes and data sources, and more accessible explanations of AI system capabilities and limitations for non-technical stakeholders.

The challenge is balancing transparency with competitive concerns and potential misuse. Making AI systems completely transparent could enable bad actors to better exploit their weaknesses, creating a tension between safety and security.

Applying these principles to you

Sometimes these safety design principles can feel like they only apply to AI engineers, but in reality they start with every one of us. Say you're an average knowledge worker. How should you apply these principles in your everyday work?

On the redundancy front, instead of thinking about backup servers, consider how you validate important information or decisions. When you're researching a topic for a presentation, do you rely on a single source, or do you cross-check information across multiple sources? When you're using AI to help write a report, do you fact-check its claims independently? This is redundancy.

When it comes to Separation of Duties, think about how this applies to your workflow with AI tools. The person who generates content using AI shouldn't be the same person who reviews it for accuracy and appropriateness. The person who sets up automated processes shouldn't be the only one monitoring their outputs.

Consider a practical scenario: you're a financial analyst using AI to help identify investment opportunities. The separation of duties principle suggests that you shouldn't be the person who both runs the AI analysis and makes the final investment recommendations without oversight. Maybe you generate the analysis, but a senior colleague reviews the AI's reasoning and a compliance officer checks that recommendations meet regulatory requirements.

What about the principle of least privilege? Whenever you use an AI tool for a task, don’t hand over more information or access than the task requires. Since the release of ChatGPT Agent, many of us have been experimenting with connectors, which link ChatGPT to other apps and data sources. You don’t need to connect your entire database. Remember to disconnect access once you no longer need it.

How can you become more antifragile? After any incident, run a quick post-mortem. Ask what went wrong, how it could have been worse, and what small change would prevent a repeat. Store the lesson in a shared “Ops Notebook” so every mistake permanently strengthens the workflow rather than just being patched over.
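If you want the Ops Notebook to be more than a loose document, one lightweight option is an append-only file with one entry per incident. This Python sketch assumes a JSON Lines file and field names that mirror the three post-mortem questions; both are hypothetical choices, and a shared spreadsheet would serve the same purpose.

```python
import json
from dataclasses import asdict, dataclass
from datetime import date
from pathlib import Path


@dataclass
class PostMortem:
    incident: str
    what_went_wrong: str
    how_it_could_be_worse: str
    prevention: str      # the small change that stops a repeat
    logged_on: str = ""  # ISO date; defaults to today

    def __post_init__(self):
        if not self.logged_on:
            self.logged_on = date.today().isoformat()


def append_to_notebook(entry: PostMortem, path: Path) -> None:
    """Append one post-mortem as a JSON line so lessons accumulate."""
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(entry)) + "\n")


def load_notebook(path: Path) -> list[PostMortem]:
    """Read every lesson back; a missing notebook simply has no entries."""
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as f:
        return [PostMortem(**json.loads(line)) for line in f if line.strip()]
```

Appending rather than overwriting means old lessons are never lost, which is what lets each mistake keep strengthening the workflow.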

To practice transparency, document what you do and why as you work. Simple habits such as adding short “why I chose this formula” comments in spreadsheets or maintaining a running project log make your process intelligible to future you and to colleagues who inherit your files. The clearer the workings, the easier it is to spot errors and hand work off safely.

AI is like fire

The myth of Prometheus ends not with his punishment, but with his eventual liberation. In some versions of the story, Hercules frees him from his eternal torment, suggesting that even the gods recognized the necessity of the gift he gave humanity. But the deeper truth of the myth isn't about punishment or freedom; it's about responsibility. Prometheus didn't just steal fire; he made a choice to give humanity both extraordinary power and the burden of using it wisely.

We stand at our own Promethean moment. The fire of artificial intelligence burns brighter each day, offering unprecedented capabilities that could help cure diseases, tackle climate change, and unlock human potential in ways we can barely imagine. But like our ancestors who first gathered around those flames, we must learn not just to harness this power, but to live responsibly with it.

The safety principles we've explored (redundancy, separation of duties, least privilege, antifragility, and transparency) aren't constraints on innovation. They're the wisdom accumulated from every previous Promethean gift, from fire to nuclear power to the internet. They're how we honor both the promise and the peril inherent in transformative technology.

The eagle that tormented Prometheus was sent by Zeus, but the real torment wasn't the daily punishment; it was the knowledge that his gift could be used for both creation and destruction, and that he couldn't control which humanity would choose. We don't have that luxury of helplessness. Unlike Prometheus, we're not chained to a rock. We're the ones making the choices, every day, about how to develop, deploy, and govern AI systems.

The myth reminds us that the most dangerous moment isn't when we discover fire; it's when we forget that it can burn. Our task isn't to return this gift to the gods, but to prove ourselves worthy of it. Start with one safety principle. Build the culture of responsibility that this moment demands.

[i] Hendrycks D. Safe Design Principles. In: Introduction to AI Safety, Ethics and Society. Taylor & Francis; 2024. Accessed August 6, 2025.

[ii] Rittel HWJ, Webber MM. What’s a Wicked Problem? In: Wicked Problem. Stony Brook University. Accessed August 6, 2025.

[iii] Taleb NN. Antifragile: Things That Gain from Disorder. New York (NY): Random House; 2012.